In this article, we’ll delve into a recent study conducted by the Tech Transparency Project (TTP) that sheds light on an alarming reality.

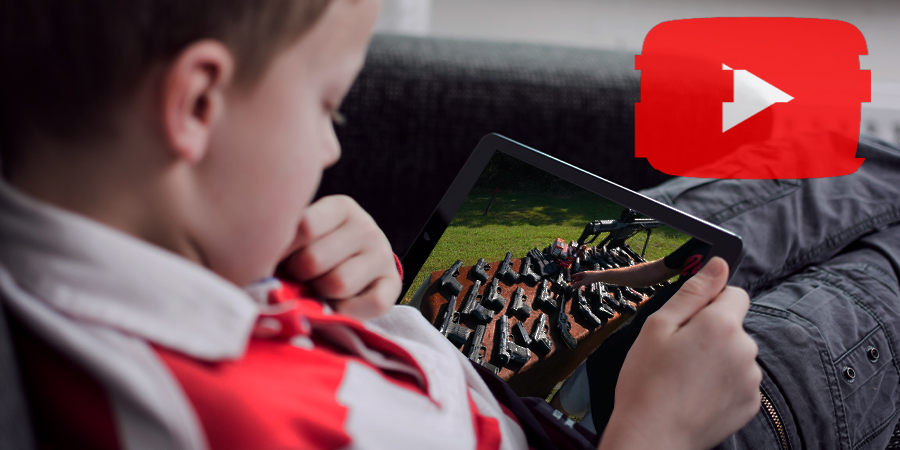

The research asserts that YouTube’s recommendation algorithm is leading children, particularly those with an interest in video games, towards graphic gun-related content, posing significant ethical and safety concerns.

Key Takeaways:

- TTP’s study highlights that YouTube’s recommendation system pushes gun-related content to young users, even those as young as nine.

- The recommended videos often violate YouTube’s own policies, including tutorials on weapon modifications and scenes of school shootings.

- YouTube defends its platform, pointing to the YouTube Kids app and its in-app supervision tools.

- Concerns about social media platforms promoting harmful content, particularly to younger users, are not limited to YouTube; similar issues have been raised about TikTok.

The Unsettling Findings of TTP’s Study

In a world where digital platforms have become the new playground, the Tech Transparency Project (TTP) has uncovered a disturbing finding.

A recent study by this nonprofit watchdog group found that YouTube’s recommendation system is steering children towards content that glorifies guns and violence.

What’s more alarming is the affected demographic: young boys, aged 9 to 14, who show an interest in video games.

The study ran over a 30-day period, during which four YouTube accounts were set up.

Two accounts simulated the behavior of 9-year-old boys, and the other two, 14-year-olds.

All of them shared a common interest: video games.

The findings were shocking, to say the least.

The YouTube algorithm began suggesting violent gun-related content to all these accounts, but the ones that interacted with these recommendations were flooded with an even higher volume of such videos.

Violation of YouTube’s Own Policies

Digging deeper, the TTP found that several videos suggested by the algorithm blatantly contravened YouTube’s guidelines.

Among the recommended content were videos of a minor firing a gun, and tutorials on transforming handguns into fully automatic weapons.

Scenes depicting school shootings and demonstrations of the harm guns can inflict on the human body were also served up to these ‘young’ accounts.

In many instances, these videos, despite being in clear violation of the platform’s policies, were monetized with ads, raising further ethical concerns.

YouTube’s Response and Defense

As the public outcry intensifies, YouTube has responded, pointing to its efforts to create a safer space for young users.

A spokesperson for the tech giant highlighted the YouTube Kids app and its in-app supervision tools, stating that they aim to provide a more secure experience for tweens and teens.

The company also expressed its openness to academic research on its recommendation systems and mentioned ongoing explorations to invite more researchers to examine these systems.

However, YouTube also pointed out the limitations in TTP’s study, such as the lack of context regarding the total number of videos recommended to the test accounts, and the absence of insights into how these accounts were set up, including whether YouTube’s Supervised Experiences tools were applied.

A Broader Concern: Social Media Platforms and Harmful Content

This issue extends beyond YouTube.

Other popular social media platforms like TikTok have also come under scrutiny for promoting damaging content, particularly to younger audiences.

There’s an increasing need for these platforms to implement stringent content moderation and ensure the enforcement of their user policies.

The connection between social media, radicalization, and real-world violence cannot be ignored.

For instance, attackers behind several recent mass shootings have used social media platforms to glorify violence and even live-stream their horrendous acts.

As the digital age continues to evolve, the safety of our children in these online spaces is of paramount importance.

The TTP’s report serves as a wake-up call, a reminder that tech giants, regulatory bodies, and society as a whole need to step up their efforts to protect the most vulnerable among us from exposure to harmful content.

Conclusion

The recent study by TTP underscores the urgent need for more robust content moderation on YouTube and similar platforms. While YouTube has made attempts to create a safer environment for younger users, the findings suggest that more stringent measures are required. As technology continues to evolve, so should our efforts to protect vulnerable users, particularly children, from exposure to harmful and inappropriate content. The key takeaway from this research is that while platforms like YouTube may have user policies in place, their enforcement must be made more effective. It’s a call to action for tech giants, regulatory bodies, and society as a whole to ensure the digital safety of our children.
